Tome's Land of IT

IT Notes from the Powertoe – Tome Tanasovski

ForEach-Parallel

Posted on May 3, 2012

I just came back from the PowerShell Deep Dive at TEC 2012. A great experience, by the way. I highly recommend it to everyone. Extremely smart and passionate people who could talk about PowerShell for days along with direct access to the PowerShell product team!

During this summit, workflows were a topic of conversation. If you have looked at workflows, there is one feature that generally catches the eye – I know it caught mine the first time I saw it – ForEach -Parallel. Unfortunately, when you dig into what it’s doing, you come to learn that it is not a solution for multithreading in PowerShell. Nope, it’s extremely slowwwwwwwwwwwwwww. If you’re like me, parallel processing is key to getting some enterprise-class scripts to run faster. You may have played with jobs before, but even they carry overhead that slows them down. Running scripts side by side works, but requires you to engineer the scripts so that they can be called that way. So what is the best way to run something like a loop of data across four threads? The answer is runspaces and runspace pooling.

function ForEach-Parallel {
    param(
        [Parameter(Mandatory=$true,Position=0)]
        [System.Management.Automation.ScriptBlock]$ScriptBlock,
        [Parameter(Mandatory=$true,ValueFromPipeline=$true)]
        [PSObject]$InputObject,
        [Parameter(Mandatory=$false)]
        [int]$MaxThreads=5
    )
    BEGIN {
        # One pool shared by every pipeline item; at most $MaxThreads runspaces run at once
        $iss = [System.Management.Automation.Runspaces.InitialSessionState]::CreateDefault()
        $pool = [RunspaceFactory]::CreateRunspacePool(1, $MaxThreads, $iss, $Host)
        $pool.Open()
        $threads = @()
        # Prepend param($_) so the caller's script block can refer to the current item as $_
        $ScriptBlock = $ExecutionContext.InvokeCommand.NewScriptBlock("param(`$_)`r`n" + $ScriptBlock.ToString())
    }
    PROCESS {
        # Queue one asynchronous invocation per input object
        $powershell = [PowerShell]::Create().AddScript($ScriptBlock).AddArgument($InputObject)
        $powershell.RunspacePool = $pool
        $threads += @{
            Instance = $powershell
            Handle = $powershell.BeginInvoke()
        }
    }
    END {
        # Poll until every invocation has completed, emitting output as each one finishes
        $notdone = $true
        while ($notdone) {
            $notdone = $false
            for ($i=0; $i -lt $threads.Count; $i++) {
                $thread = $threads[$i]
                if ($thread) {
                    if ($thread.Handle.IsCompleted) {
                        $thread.Instance.EndInvoke($thread.Handle)
                        $thread.Instance.Dispose()
                        $threads[$i] = $null
                    }
                    else {
                        $notdone = $true
                    }
                }
            }
            # Brief pause so the polling loop does not pin a CPU core
            if ($notdone) { Start-Sleep -Milliseconds 50 }
        }
        # Release the pool's runspaces once everything has finished
        $pool.Close()
        $pool.Dispose()
    }
}

With that function, you can do things like this:

(0..50) | ForEach-Parallel -MaxThreads 4 {
    $_
    sleep 3
}

You’ll notice that the above causes batches of four to run simultaneously. Actually, it looks like the data is running serially, but it’s really in parallel. A better example is something like this that simulates that some processes take longer than others:

(0..50) | ForEach-Parallel -MaxThreads 4 {
    $_
    sleep (Get-Random -Minimum 0 -Maximum 5)
}

Mind you, parallel processing doesn’t always make things faster. For example, if your CPU consumption per thread is more than your box can handle, you may be adding latency due to scheduling of the CPU. Another example is that if it’s not a long running process that you are performing in your loop, the overhead for starting up multiple threads could make your script slower. Just use your head and play with it. In the right place at the right time, this is an absolute lifesaver.
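If you want to check whether a runspace pool actually buys you anything for a given workload, time it both ways. Here is a minimal, self-contained sketch of that comparison. The 200 ms Start-Sleep, the eight items, and the pool size of four are stand-ins I picked for illustration, so swap in your real work before drawing conclusions.

```powershell
# Stand-in work item (assumption): sleep briefly, then return double the input.
$work  = { param($n) Start-Sleep -Milliseconds 200; $n * 2 }
$items = 1..8

# Serial baseline: run each item one after another.
$sw = [System.Diagnostics.Stopwatch]::StartNew()
$serialResults = foreach ($i in $items) { & $work $i }
$serialMs = $sw.ElapsedMilliseconds

# Parallel run: queue every item on a pool of up to four runspaces.
$sw.Restart()
$pool = [RunspaceFactory]::CreateRunspacePool(1, 4)
$pool.Open()
$jobs = foreach ($i in $items) {
    $ps = [PowerShell]::Create().AddScript($work.ToString()).AddArgument($i)
    $ps.RunspacePool = $pool
    @{ Instance = $ps; Handle = $ps.BeginInvoke() }
}
$parallelResults = foreach ($job in $jobs) {
    $job.Instance.EndInvoke($job.Handle)   # blocks until this item has finished
    $job.Instance.Dispose()
}
$pool.Close()
$pool.Dispose()
$parallelMs = $sw.ElapsedMilliseconds

"serial: $serialMs ms, parallel: $parallelMs ms"
```

Because this stand-in work is mostly waiting, I would expect the pooled pass to come out ahead. With CPU-bound or very short work the gap can shrink or reverse, which is the caveat described above.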

Note: I learned this technique from Dr. Tobias Weltner, but for some reason I can’t find the link to the video where he discussed it.

PowerShell Studio 2012 – vNext for Primal Forms

Posted on April 1, 2012

I have just returned from the amazing lineup of PowerShell sessions at the NYC Techstravaganza. Sapien happened to sponsor the PowerShell track. This gave us the opportunity to hear what the company has been up to directly from their CEO, Dr. Ferdinand Rios. I should note, not only did we get updates about their 2012 products, but we were handed USB keychains that were fully loaded with beta software!

The session brought us through the updates that Sapien has made to iPowerShell (their iOS app) and PrimalScript. Both had a whole set of new features, but it was the news about Primal Forms that I thought was worth blogging about. Here are some of the new features we saw (this is probably not a comprehensive list – it’s just the items that raised my eyebrow during the session):

Primal Forms is now called PowerShell Studio 2012

This makes a lot of sense to me. It is a name that more appropriately tells what Primal Forms is. It’s not only a full-fledged winform development environment for PowerShell, but it’s also a fairly robust integrated scripting environment (ISE). The only downside is that there is already a CodePlex project with this name. It’s sure to spin up some conflict or debate.

Layout Themes

One thing is clear when working with Primal Forms 2011: you definitely don’t want or use all of the panes that you have open all the time. When working on forms, you need a whole different layout than you need when you want to just work on a simple script. Layouts can now be switched rapidly via a control in the bottom left. These layouts continue to switch automatically, but you can also control them manually.

Font Size Slider

This is a slider that will change the size of the fonts in your script window. I use this slider all the time in powershell_ise.exe when giving demos. I’m glad this simple change is now in the app.

Function Explorer

This one is cool. There is a pane that will allow you to quickly click between events and functions in your projects. It’s dynamically built. Obviously, it’s really neat when trudging through the complex structure of a scripted winform, but I am finding that it’s really cool for large modules too.

Change-in-code Indicator

There is an indicator between your code and the line numbers that is triggered when you change your code. If you open a script, and then make a change to that script, a yellow indicator shows that this has been changed:

Once you save the file, the indicator turns green:

If you open the script again, the indicator resets to not being there.

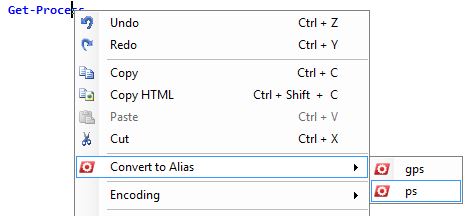

Toggle a Cmdlet to and from an alias

Apparently, you could always toggle an entire script to remove all aliases. I was not aware of this. Regardless, you can now right click on a cmdlet or alias to toggle between the cmdlet and its aliases.

Cmdlets have a ‘Convert to Alias’ context menu:

Aliases have an ‘Expand to Cmdlet’ context menu.

I should note that as of the beta you can convert to the alias foreach or % in place of Foreach-Object, but you cannot expand it back to a cmdlet.

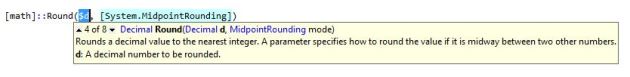

Tab Completion of .NET Methods

Neat-o feature. When you enter a method, you get a helper window to tell you the overloaded options. You can press up and down (or click up and down in the intellisense helper) to select the appropriate parameter set that you plan to use:

Once you have found the right method, you can press tab (like the helper says) to autofill the method’s parameters. This is really nice with methods that take an enumeration. It saves you from having to type out the entire type name. For example, the next image shows that it has typed the entire [System.MidpointRounding] for me.

It goes a bit further too. As you can see above, a variable name is created and highlighted. You can immediately start typing another variable or decimal. Once you are done entering that parameter, you hit tab to go to the next one. In the case of an enumeration, like the one above, it lets you select the member you would like to use. This is handled via another intellisense helper that you can quickly move to with the up and down arrows. It even gives you information about what the item does:
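For anyone who has not run into [System.MidpointRounding] before, here is a small sketch of why you would bother typing it at all. It is plain .NET called from PowerShell, nothing specific to PowerShell Studio, and the variable names are just mine for illustration.

```powershell
# Without the enumeration, [math]::Round uses banker's rounding (midpoints go to the even number).
$toEven = [math]::Round(2.5)

# Passing the enumeration member asks for the schoolbook behavior instead.
$awayFromZero = [math]::Round(2.5, [System.MidpointRounding]::AwayFromZero)

"default: $toEven, AwayFromZero: $awayFromZero"

# List the members available on the runtime you happen to be using.
[System.Enum]::GetNames([System.MidpointRounding])
```

The first call gives 2 and the second gives 3, which is the kind of difference the helper's per-member description is there to explain.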

Control Sets

This is the one that matters! This is the promise of scripted GUIs in my opinion.

You can package sets of common form controls, events, and functions as a control set. This gives you an easy way to add something to your form that is a complete thing (for lack of a better word) via drag and drop. For example,

- You can add text boxes that have validation pre-configured so that it will validate whether or not you have an e-mail address or phone number.

- You can add charts that automatically pull data from a specific cmdlet.

- You can add buttons that run background processes or jobs that include status bars and indicators that help the end-user understand what is happening.

- You can also include a quick textbox that will automatically have a button with an associated file-dialogue box that will populate the textbox.

Here is a list of the control sets that are in the beta:

I should note that the “TextBox – Validate IP” is one that I created this morning during breakfast. I was floored by how easy it was to do (with some knowledge of winforms). Actually, not only easy, but they give you the ability to utilize shared controls between the control sets. In other words, if you already have an appropriate ErrorProvider object that can serve the validation for your TextBoxes, it will use that object rather than creating a new one if you tell the wizard that it can do so. I will be blogging a detailed tutorial on how to do this today or tomorrow.

Debugging a Script that uses Remoting

Okay, this is the final feature. The demo we saw did not show this. Also, the beta version we received does not yet have it. However, the promise was made in Ferdinand’s last slide. When PowerShell Studio 2012 ships sometime in the Spring, it will have a remote debugger.

Open a file in PowerShell ISE via cmdlet – Version 3 Update

Posted on April 1, 2012

A while back I posted an article that discusses a cmdlet I created that opens up text files in PowerShell ISE. It’s fairly robust: it accepts pipeline input, wildcards, handles multiple files, etc. I was just transferring my profile over to my Windows 8 Server computer, and I decided to revisit the script for PowerShell ISE in version 3.0 of PowerShell.

If you are not aware, powershell_ise.exe now accepts a new parameter called -File:

powershell_ise.exe -help

The way that -File works is better than I expected. It not only opens up a PowerShell ISE window, and then opens the file in it, but it will inject the file into a PowerShell ISE window if it is already open. Here is the relevant bit of code to launch powershell_ise.exe from a powershell.exe host.

# $files is an array that contains the full path to every file that will be opened

start powershell_ise.exe -ArgumentList ('-file',($files -join ','))

You can download the full script on PoshCode.
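If you only want the gist without grabbing the full script, here is a rough sketch of how the $files list and the -File argument could be put together. Get-IseFileArgument is a hypothetical helper name of mine, not part of the PoshCode script, and wrapping the whole comma-separated list in double quotes is my assumption for keeping paths with spaces in one piece.

```powershell
# Hypothetical helper: builds the argument string for powershell_ise.exe -File.
function Get-IseFileArgument {
    param(
        [Parameter(Mandatory=$true)]
        [string[]]$Path
    )
    # Resolve wildcards and relative paths to full file system paths.
    $files = foreach ($p in $Path) {
        Resolve-Path -Path $p | ForEach-Object { $_.ProviderPath }
    }
    # Quote the comma-separated list (assumption) so spaces do not split the argument.
    '-File "{0}"' -f ($files -join ',')
}

# Usage on a Windows box with ISE installed (left commented so the sketch runs anywhere):
# Start-Process powershell_ise.exe -ArgumentList (Get-IseFileArgument -Path .\*.ps1)
```

Keeping the string building separate from the launch makes it easy to eyeball the argument before ISE ever starts.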

Powerbits #8 – Opening a Hyper-V Console From PowerShell

Posted on March 13, 2012

I am right now Windows Server 8 and PowerShell 3 Beta obsessed. I want to blog – I want to blog – I want to blog, but I’m trying to hold back a lot of it until we see how everything shakes out. I’m running Server 8 beta on my new Asus ultrabook. Because of this, I’m also running a ton of Hyper-V VMs on my fancy type-1 hypervisor in order to play with the new features in PowerShell 3 such as PowerShell Web Access and disconnected PSSessions. I love the autoload of the Hyper-V module when I do something like Get-VM, but I was really disappointed that there was no cmdlet to open up a console session for one of my VMs. I mean, I have no interest in loading up a GUI for Hyper-V to do this. A bit of quick research led me to vmconnect.exe.

So, without further ado, here’s a quick wrapper that will let you open up Hyper-V console sessions directly from PowerShell. This is now permanently in my profile:

function Connect-VM {
    param(
        [Parameter(Mandatory=$true,Position=0,ValueFromPipeline=$true)]
        [String[]]$ComputerName
    )
    PROCESS {
        foreach ($name in $ComputerName) {
            vmconnect localhost $name
        }
    }
}

Now, you can either do something like

Connect-VM server2

or

'server2','server3'|connect-vm

Finally, if you are running PowerShell 3.0, you can do the following:

(get-vm).name |Connect-VM

Scripting Games 2012

Posted on March 13, 2012

If you’re not aware by now, the Scripting Games will begin on April 2nd. Sure, it’s an opportunity to put your skills to the test. However, more important than that, it is an opportunity to receive constructive feedback about how to make your scripts better. I am honored to help out in the judging again for the third year in a row, and I look forward to putting in some time to rank, review, and critique your hard work.

For more info check out the 2012 Windows PowerShell Scripting Games: All Links on One Page

See Tome Speak!

Posted on March 8, 2012

I’ve been very busy, and I keep getting busier. In the next few months I’m doing quite a bit of speaking. You can see me at the following:

I’ll be doing a talk entitled, “What’s New in PowerShell V3” on March 30th for the NYC Techstravaganza.

I’ll be doing two shorter talks entitled, “Building a PowerShell Corporate Module Repository” and “Pinvoke – When Old APIs Save the Day” for The Experts Conference (TEC 2012) in San Diego, April 29th – May 2nd.

I’ll also be using the NYC PowerShell User Group regular meeting on April 9th as an opportunity to give myself a dress rehearsal for TEC.

If you follow my blog, and happen to be at one of those events, I look forward to meeting you. Make sure to introduce yourself. I promise I don’t bite, but I have been known to talk about PowerShell for hours on end.

What’s Big in 2012?

Posted on January 31, 2012

A friend of mine asked me an innocent enough pair of questions, “What’s going to be big in 2012?” … “What’s worth learning?” I approach these questions cautiously. In most organizations 2012 is a year of finishing up projects: mainly win7, virtual desktops, or both. You should have a good handle on application delivery or at least a strategy for how to handle it in the years to come. You’ve probably spent some time looking and perhaps implementing some of the layering technologies that can be used with VDI. For many, that is still the focus area. Some of you are putting focus back into Citrix for published desktops or for app delivery as people bring their devices to work. Finally, a few of you are evaluating the multitude of cloud services that are now available that may act as a better alternative to what you can do in your own house. Others are still scratching their heads as they try to figure out what a private cloud means to them.

Rather than be the pundit on a pulpit making predictions, I’ll just tell you what I plan on doing this year.

What’s in your lineup for 2012, Tome?

Glad you asked. First and foremost this is obviously the year that Microsoft wants me to get up to speed with Windows 8 and System Center 2012.

Windows 8

I’m not talking desktop here. I’m talking server! Metro apps are aesthetically in this millennium, which is nice. However, the pieces of meat that interest me have nothing to do with HTML 5, fake suspended apps that instantly recreate state, or the way search works. Nope, the interesting stuff is definitely in server. There is a new file system (ReFS), extensions on existing ones (NTFS with dedupe), tons of new cmdlets, a direction from the Server team to go all server core (the command line only version of Windows Server), VHD improvements (vhdx), virtual aware DCs, Active Directory Admin Center (ADAC), and who knows what else I’m about to learn this year when I hit this topic hard.

Hyper-V

I’m finally ready to learn everything I can about this hypervisor. I know ESX very well and I love it. I’ve had plenty of opportunities to have tongue-in-cheek comments like, “I think Microsoft just announced that they invented v-motion” or “Hey, look at this: Hyper-V can overcommit memory now”. Now the feature set is full, and Microsoft is starting to use the technology in ways that are integrated into other products – like …. wait for it … Private Cloud

System Center 2012

Hand in hand with Hyper-V are some of the components within System Center. I think the best way to put it is that if it runs the private cloud infrastructure, I plan on trying it out. While the term cloud is an overused word that describes development best practices for the past few years, the mainstream adoption and flexibility of virtualization have made this a sexy term. What I believe Microsoft is offering (I will know better once I start playing with it) is their new application framework. I believe that their hope is that if IT Pros can easily create and set up Azure-like services, that it will be easier to convince developers to develop on those services. This, of course, means it’s much easier to then push those services to the public cloud at a later date – perhaps due to in-demand bursts, perhaps due to outsourcing, perhaps just for the sexiness of it.

Big Data

I spent a lot of time near the end of last year getting up to speed on some of the big data options. If you’re not familiar with the term, let’s just say that it’s quantities of data that would make a relational database feel uncomfortable while maintaining the querying speed of a relational database – in most cases it is faster because you are leveraging multiple servers to pull back data in parallel streams. I have played with some simple NoSQL data stores like MongoDB and Cassandra, and I have tackled some map reduce with Hadoop, CouchDB, and Splunk.

Splunk

Splunk is where I am committing my time at the moment. Splunk allows you to ingest time-series data from disparate sources, but that’s only the beginning. The power is twofold: First, in their slick query language that lets you spin real-time slices of that data in ways that were traditionally only possible with a data architect and a defined cube of your data sets. Second, in their ability to combine and reconcile data events from the multiple sources that you feed it. The fact that they require very little setup (compared to the other options) to get going makes them the easiest way that I know of to implement a big data solution without a lot of heavy developer work. This relatively simple time-to-implement has also enabled them to adopt this ridiculous pricing model that is horrible for us, but is sure to keep them in business long enough to keep their competitive edge.

1010Data

Also, I’m keeping an eye on 1010data. They are a business-driven solution that enables people to get the same slicing and dicing power that you can get from Splunk without requiring a data architect, but the solution is 100% cloud based and uses an online spreadsheet to empower their users. It currently acts as the data warehouse for NYSE – they store every historical trade in their trillion row spreadsheet. This is a much different beast than Splunk. Splunk is right now the IT Pro’s tool for managing the sprawl of machine data. 1010data is all about number crunching and speed. Splunk enables IT Pros and Devs, while 1010data enables business. Both of these are extremely valuable things to understand if you plan on designing the future vision of IT in your environment.

Teradata Aster

I’m really curious to see what Teradata’s acquisition of Aster bears. I’m not overly up to speed with Teradata to begin with, but I know it’s a “hot” product that I should know. The fact that Aster provides map reduce capability to the Teradata data store makes my eyebrow rise a bit higher. I’m committed to some more research – I expect that will be conversations with people in that area and a lot of reading more than anything practical.

PowerShell

How could I talk about 2012 without PowerShell? V3 was so Q4 of 2011, but I am waiting for the next CTP or beta release to see what is making the cut and what will not. Soon it will be time to actually use V3 in production – now that’s when it gets fun.

Additionally on the PowerShell front, I’ve been hitting a series of AI/Collective Intelligence algorithms hard the past few months to see what I can use them for in PowerShell. This is more of an academic pursuit than anything else although it was directly spawned from a task I was handed at work. The plus for the rest of the world is that I’ve been developing a fairly nice suite of cmdlets that can implement the various algorithms. I hope to release this as a module in 2012.

Comments

What do you think is hot in 2012? What am I missing? Drop a line in the comments here or on Google+

Powerbits #7 – Copying and Pasting a List of Computers into a PowerShell Script as a Collection

Posted on January 23, 2012

It’s quite common to receive an e-mail from someone that has a list of computers that need a script run against them – or perhaps a list of perfmon counters that need to be collected – or even a list of usernames that someone needs you to pull from AD in order to create a custom report about the users and their attributes. There are a few ways to put this data into your scripts. Probably the most common method I have seen is to put this data into a file and run Get-Content file.txt. This works fine, but I generally just need to do a bit of one-off scripting and want to bang it out quick. When that happens I throw the list into a here string and break it up with the -split operator:

$computers = @"
Computer1
Computer2
Computer3
Computer4
"@ -split '\r\n'

This creates a collection of strings where each line has its own string.

PS C:\> $computers.gettype()

IsPublic IsSerial Name                                     BaseType
-------- -------- ----                                     --------
True     True     String[]                                 System.Array

Now you can throw this collection into a foreach loop

foreach ($computer in $computers) {
    #Do something to $computer
}

or better yet you can throw it at a parameter that takes a collection like Invoke-Command

Invoke-Command -Computername $computers -Scriptblock {Get-Process}

Nice! I just fanned out Get-Process to the four computers in my list!
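One wrinkle with pasted lists: they often arrive with stray indentation, blank lines, or line endings that are a bare newline rather than a carriage return plus newline. Here is a slightly more forgiving sketch of the same trick. The sample names and the messy spacing are made up for illustration.

```powershell
# A pasted list with the usual mess: leading spaces and an empty line.
$raw = @"
Computer1
  Computer2

Computer3
"@

# '\r?\n' matches both CRLF and LF line endings; Trim and the filter tidy up the rest.
$computers = $raw -split '\r?\n' |
    ForEach-Object { $_.Trim() } |
    Where-Object { $_ -ne '' }

$computers
```

This leaves three clean names in $computers, ready to hand to a foreach loop or to Invoke-Command.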

V3 ISE Colors

Posted on January 13, 2012

I have a love/hate relationship with the new ISE. I’ll spare any discussion on the topic until after the CTP is no longer a CTP. In the meantime, I’ll just leave you with a quick script to help you if you are as resistant to change as I am with my beloved ISE.

In the new ISE the output pane and command pane are one. They happen to look like this:

For my eyes, I prefer the go-lightly look of the v2 ISE. If you feel the same way, you can have everything you want by running the following script:

$psise.Options.OutputPaneBackgroundColor = "#FFF0F8FF"
$psise.Options.OutputPaneForegroundColor = "#FF000000"
$psise.Options.OutputPaneTextBackgroundColor = "#FFF0F8FF"
foreach ($key in ($psise.Options.ConsoleTokenColors.keys |%{$_.tostring()})) {
    $color = $psise.Options.TokenColors.Item($key)
    $newcolor = [System.Windows.Media.Color]::FromArgb($color.a,$color.r,$color.g,$color.b)
    $psise.Options.ConsoleTokenColors.Item($key) = $newcolor
}

Now doesn’t that look better – I mean more familiar 🙂 The above applies the script pane’s token colors to the command pane. Token colors are new to the command pane in V3.

Two additional notes:

1) If you want to restore back to the original look and feel that was shipped, you can use the following:

$psise.Options.RestoreDefaults()
$psise.Options.RestoreDefaultConsoleTokenColors()

2) Everything in this article can now be done in ISE via their new color themes, but again let’s wait to see what happens during RTM. However, you can play with it yourself for now by clicking Tools->Options… and then clicking the Colors and Fonts tab in ISE.

Out-GridView Now Has a PassThru Parameter

Posted on September 19, 2011

So CTP 1 for PowerShell v3 was released. Go get it now. I will use another hash tag for this called v3CTP1 so that I can quickly fix these posts when I go back after V3 is RTM.

So, with so much to talk about, I’m going to show something simple that I think is madly madly cool! Out-GridView now has a PassThru parameter. What does it mean, what does it mean?

Try the following:

Get-Process |Out-GridView -PassThru |Select Name

You’ll see your normal Out-GridView, but you’ll also see an OK and Cancel button in the bottom right.

What does it mean?

Filter the contents of the gridview to display things you are interested in, then click on a few rows. When you have selected a few, click OK! You have just passed the objects that you are interested in through the pipeline to the next cmdlet, which happens to be Select Name. What an amazing cmdlet! The ability to have the operator of your script use the filtering and sorting of Out-GridView to choose data that should be processed!
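To make the "operator chooses what gets processed" idea concrete, here is a small sketch that hands the selection to a cmdlet that actually does something. Stop-SelectedProcess is a hypothetical function name of mine, and I have left -WhatIf on so that nothing is really stopped while you try it out. It needs a desktop session, since Out-GridView is a GUI.

```powershell
# Hypothetical wrapper: let the operator pick processes in the grid, then act on the picks.
function Stop-SelectedProcess {
    Get-Process |
        Out-GridView -PassThru -Title 'Pick processes to stop, then click OK' |
        Stop-Process -WhatIf   # -WhatIf only reports what would be stopped
}

# Usage (interactive): Stop-SelectedProcess
```

Clicking Cancel passes nothing down the pipeline, so the last cmdlet simply has no input to work on.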